We are very excited to announce that the LEEAF project by Spark Works has completed the DESIGN and EXPERIMENT stages of the 2nd Open Call programme of IoT-NGIN (‘Next Generation IoT as part of Next Generation Internet’). The programme invited SMEs active in IoT development to implement innovative applications that use heterogeneous IoT and IoT-NGIN components, both to offer new services and to validate the IoT-NGIN components. Spark Works was among the 10 SMEs selected to join the IoT-NGIN family and benefit from IoT-NGIN innovations.

LEEAF stands for machine-Learning Edge Enabled Autonomous tree inFection detection. The project aimed to use state-of-the-art technologies, including computer vision, machine learning, artificial intelligence, and Unmanned Aircraft Systems (UASs), to make it easier to inspect olive groves regardless of their size or location. LEEAF can thus help olive farmers target treatments and increase profitability. Farmers can use drones, or even the cameras on their smartphones, to inspect the trees in their olive groves. With the help of our ML and AI models, they can easily understand the condition of their trees and any threats to their yields.

Our initial work was based on existing open-source image datasets (such as the Olive Leaf Dataset and Plant Village), but in the course of the LEEAF project we realized that we needed to build our own image dataset, with more complex images taken in more diverse conditions. We therefore gathered an initial collection of images showing individual leaves, small branches, large sections of olive trees, and whole olive trees. The dataset is available on GitHub as an open-source dataset, and we keep updating it with new sources and with the evaluation results of our analysis and experiments.

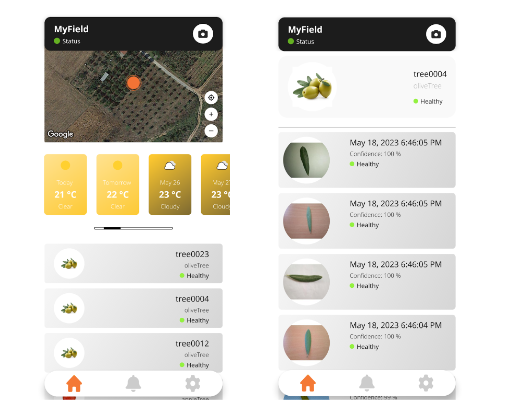

The LEEAF application is the main gateway for users to review the results of the autonomous flights performed in their groves and to see the suggestions generated by the LEEAF AI. The home view represents the olive grove and gives the farmer basic information about the property, the weather forecast for the next seven days, and the number of trees registered in the LEEAF platform. For each tree, the user gets an indication of its health status, calculated by aggregating the results of the images analyzed by the LEEAF AI.

Users can also submit images for inspection directly through the mobile phone’s camera. To do so, the user taps the camera icon in the top right corner of the screen, which opens the phone’s camera, and takes a picture of the leaf or leaves they want to inspect. The image is uploaded directly to LEEAF, where the leaves are automatically identified in the image, extracted, and analyzed using the respective ML model. The analysis results appear in the tree’s view within a few minutes.

The next application view focuses on each leaf detected by our application. In this view, the user can see the date of the photo, its recorded name, and the classification status.
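To make the per-tree health status more concrete, here is a minimal Python sketch of how per-leaf classification results could be aggregated into a single status for each tree. It is an illustration only, not the LEEAF implementation: the `LeafResult` type, the `healthy`/`infected` label set, the `at risk` status, and the two ratio thresholds are all assumptions chosen for the example.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class LeafResult:
    """One classified leaf (hypothetical structure, not the LEEAF schema)."""
    tree_id: str
    label: str  # assumed label set: "healthy" or "infected"


def tree_health(results, warn_ratio=0.2, alert_ratio=0.5):
    """Map each tree to a status based on its share of infected leaves.

    The thresholds are illustrative assumptions, not values from LEEAF.
    """
    totals = Counter()
    infected = Counter()
    for r in results:
        totals[r.tree_id] += 1
        if r.label == "infected":
            infected[r.tree_id] += 1

    status = {}
    for tree_id, n in totals.items():
        ratio = infected[tree_id] / n
        if ratio >= alert_ratio:
            status[tree_id] = "infected"
        elif ratio >= warn_ratio:
            status[tree_id] = "at risk"
        else:
            status[tree_id] = "healthy"
    return status


# Example: three trees with different shares of infected leaves.
sample = (
    [LeafResult("tree-1", "healthy")] * 3 + [LeafResult("tree-1", "infected")]
    + [LeafResult("tree-2", "infected")] * 3 + [LeafResult("tree-2", "healthy")]
    + [LeafResult("tree-3", "healthy")] * 2
)
print(tree_health(sample))
```

A ratio-based rule like this keeps a single misclassified leaf from flipping a tree's status. A real system might instead weight results by model confidence or by how recent each image is.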

The LEEAF framework and the results of our evaluation were also presented at the International Symposium on Algorithmic Aspects of Cloud Computing (ALGOCLOUD’23), held on September 5, 2023, in Amsterdam, under the title “Olive Leaf Infection Detection using the Cloud-Edge Continuum”, and received the Best Student Paper Award!
